Why AI is Transforming India’s Banking Sector

Although AI as a concept has been around for a long time, the emergence of generative AI in recent years has transformed many industries because of the benefits it can bring: efficiency, cost savings, new products and services, and new revenue streams.

This holds especially true for India’s banking industry. According to a recent EY India survey, 78% of financial institutions are currently implementing or planning GenAI integration. Recent industry examples include iPal, an omnichannel bot fielded by ICICI to handle general banking queries from customers, and ILA, an interactive live assistant from SBI Cards that guides customers through the process of applying for new credit cards.

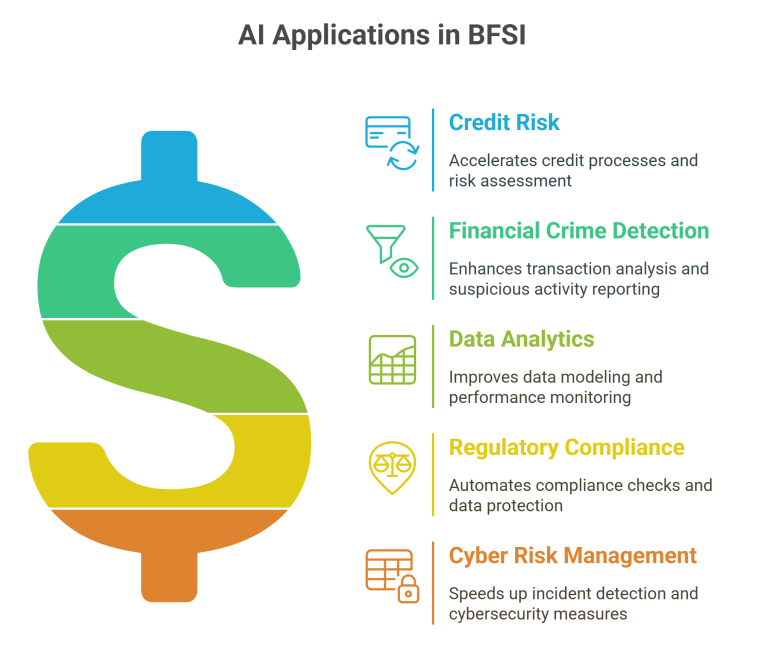

This is becoming an industry-wide trend – while private sector banks have been more proactive in adopting AI, public sector banks are also increasingly recognizing its potential. According to RBI’s comparative analysis of annual reports from 2015–16 to 2022–23, public sector banks increased the use of AI-related terminology more than threefold, while private banks recorded a sixfold rise. Beyond customer service, AI is reshaping core banking functions, from credit risk assessment and financial crime detection to data modelling, compliance, and cybersecurity.

Private vs Public Sector AI Adoption Trends

The business benefits of adopting AI can be considerable. However, the risks of embedding these relatively new technologies into existing systems are often not considered at the outset. If that is the case, businesses may find themselves vulnerable to new challenges that they do not yet know how to deal with. There are several novel risks that come with AI integration:

- Black-box nature: Particularly with deep learning models, it can be challenging to understand the internal workings and decision-making processes of AI models. This ‘black-box’ nature makes it difficult to assess the system’s reliability and can hide problems such as inherent bias against particular groups of users, intellectual property infringement from the model’s use of Internet data, and unauthorised disclosure of sensitive personal data.

- Risk of inadequate human oversight: The black-box nature of these models can lead to over-reliance on automated systems and make it difficult for banks to intervene effectively when required. This creates a compliance challenge, especially if AI is processing sensitive customer data, as India’s Digital Personal Data Protection Act (DPDPA) has clear data usage policies for fiduciaries to follow.

- Third-party risks: Banks often work with many different vendors, each with different kinds of AI potentially embedded in their systems. Failure of a single model or provider could disrupt the entire banking operation.

- Malicious AI: The harsh reality is that as organizations adopt AI, so do cyberattackers around the world. With AI in their arsenal, attackers can destabilise banks through tactics like identity fraud, rogue trading and market manipulation.

The Cybersecurity Risks of Unchecked AI Integration

AI has increased both the frequency and the complexity of attacks, while lowering the barrier to entry for less skilled attackers. Indian banks are bearing the brunt of this increase – according to Check Point’s recent Threat Intelligence Report, banking & financial institutions in India experienced an average of 2,525 cyberattacks in the last 6 months of 2024, roughly 50% higher than the global average of 1,674 per organization. The diversification of attacks through AI is the key reason for this boom:

- Attackers can scale up and accelerate phishing campaigns with realistic-looking messages that target hundreds of people at once.

- Attackers can use AI to map your system structure and find places to inject malware, ransomware and other complex attacks.

- If AI integration is not done properly, it could introduce previously unknown vulnerabilities (zero-days) into your systems that attackers could exploit.

- Finally, attacks could target the AI systems themselves through techniques like data poisoning. For banks, this could lead to manipulation of the algorithms used for credit scoring or fraud detection. When system integrity is compromised in this way, institutions could face significant financial losses & reputational damage.

Four Steps to Align AI with Cybersecurity in BFSI

The beauty of AI is that while cybersecurity measures can be tailored towards protecting it, AI itself can be a major weapon in your cybersecurity strategy. But where do you start in creating this symbiosis?

Here are 4 steps to help you achieve this crucial balance.

Step 1: Secure AI Development and Deployment

Aligning AI with cybersecurity starts with identifying where you currently stand. You may have no AI in your systems at all, which calls for a ground-up approach to identifying risks and rewards. More likely, AI is already in use in your organization, and you need to assess how to manage its security retroactively. Here are some scenarios in the AI adoption cycle that your company could find itself in:

| Scenario | Risks Associated |
| --- | --- |
| Unconscious use of AI through product features in existing outsourced tools (ERP, HR, etc.) | |
| Known usage of AI systems & models by your third-party vendors | |
| Your teams have started the process of developing internal AI tools | |
| You are in the experimentation & piloting stage when it comes to integrating AI | |
| You are in the process of rolling out & integrating AI systems into live operations | |
| You have multiple, disparate AI projects across your organization | |

Step 2: Strengthen Vendor and Data Governance

Deciding whether or not to proceed with an AI project is a process that involves accounting for business impact, feasibility and risk. There are various ways attackers could damage your organization, depending on where you decide to deploy AI:

| Functions | Potential Attacks |
| --- | --- |
| AI Models | |
| AI Model Inputs | |
| AI Model Outputs | |
| AI Model Training | |
| Monitoring & Logging | |
| Directly supporting AI infrastructure | |

Step 3: Build Cross-Functional AI Risk Teams

Once you have decided to move forward, you must combine pre-deployment security measures put in place at the outset (‘shift left’) with post-deployment measures that ensure the resilience & recovery of systems in use (‘expand right’). Here are some examples of best-in-class practices for each strategy:

| Shift Left | Expand Right |
| --- | --- |
| Data management protocols to safeguard sensitive data and prevent collection of unnecessary data | Secure access control for data protection in the form of phishing-resistant MFA or strong PAM |
| Embedded alerting & monitoring mechanisms that set baselines for user & system behavior, so that immediate alerts can be generated if there is any deviation from these parameters | End-to-end incident response programs that can bring immediate action whenever an incident occurs – AI can be used for monitoring these systems and providing more accurate threat detection |
| Setting unique identifiers to effortlessly classify all the data involved | Having security governance measures in place to monitor all third-parties |
| Having a backup architecture that promotes instant recovery in the case of an incident | Training of staff to determine what kind of data should be shared with these models |
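
To make the baseline-and-deviation idea from the ‘shift left’ column more concrete, here is a minimal Python sketch. It assumes a simple statistical baseline (mean and standard deviation of past activity) and a fixed alert threshold; the function names, the threshold and the sample login counts are all hypothetical, and a production monitoring system would use far richer signals.

```python
from statistics import mean, stdev


def build_baseline(history):
    """Summarize past observations as (mean, sample standard deviation)."""
    return mean(history), stdev(history)


def is_anomalous(value, baseline, threshold=3.0):
    """Return True if value lies more than `threshold` standard
    deviations away from the baseline mean."""
    mu, sigma = baseline
    if sigma == 0:
        # No variation in history: any different value is a deviation.
        return value != mu
    return abs(value - mu) / sigma > threshold


# Hypothetical hourly login counts for one service account (illustrative data).
history = [40, 42, 38, 41, 39, 43, 40, 37]
baseline = build_baseline(history)

# New observations to check against the baseline.
new_values = [41, 44, 120]
alerts = [v for v in new_values if is_anomalous(v, baseline)]
print(alerts)
```

With this sample data, only the last observation deviates enough from the baseline to raise an alert. The threshold is a tuning choice: a lower value catches more deviations but generates more false alarms.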

Step 4: Align with India’s Regulatory Expectations

AI is evolving rapidly. Therefore, ‘shift left, expand right’ should not be a one-time exercise but a continuous process that adapts as the AI landscape changes. To create this culture, your whole organization must be in sync with these best practices:

- A cross-disciplinary AI risk function must be formulated with members from your legal, compliance, HR, ethics and front-line business teams

- A running inventory of AI applications must be maintained that helps you assess how & where AI is being used in your organization at any given point, including whether it is involved in mission-critical apps

- Adequate discipline must be maintained when transitioning from AI experimentation to operational use

- Information governance policies must be established that dictate what kind of data should be shared with AI models

- A complement of tech, people & process-based controls must be in place to maintain AI compliance with India’s regulatory environment
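
As one illustration of the running inventory mentioned in the list above, the Python sketch below models each AI application as a small record and filters for those that deserve the closest attention. The field names, the stage labels, the example entries and the review rule are all assumptions made for illustration, not a prescribed schema.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AIApplication:
    """One entry in a hypothetical AI inventory."""
    name: str
    owner_team: str
    vendor: Optional[str]          # None means built in-house
    stage: str                     # assumed labels: "experiment", "pilot", "production"
    mission_critical: bool
    handles_personal_data: bool


def needs_priority_review(app: AIApplication) -> bool:
    """Assumed rule: production apps that are mission-critical or
    touch personal data are reviewed first."""
    return app.stage == "production" and (
        app.mission_critical or app.handles_personal_data
    )


# Illustrative entries only; these are not real systems.
inventory = [
    AIApplication("chat-assistant", "Customer Service", "ExampleVendor", "production", False, True),
    AIApplication("credit-scoring-model", "Risk", None, "production", True, True),
    AIApplication("doc-summarizer", "Legal", None, "experiment", False, False),
]

priority = [app.name for app in inventory if needs_priority_review(app)]
print(priority)
```

Keeping the stage on each record also supports the point about discipline when moving from experimentation to operational use: a change of stage becomes an explicit, reviewable event rather than a silent drift.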

As Indian banks race to integrate AI, those that balance innovation with proactive cybersecurity will gain more than protection: they’ll earn trust. Leading financial institutions are already embedding security from design to deployment.

Is your AI strategy secure enough to scale?